The Future of Product Metrics is Here: Meet TARS

Evaluating Feature Effectiveness: The Key to Achieving Balance

As a product manager, I’ve always had an unhealthy obsession with metrics. They are like a siren call, drawing me in with promises of success and customer satisfaction.

I’ve spent countless hours poring over spreadsheets and statistics, searching for the elusive formula to unlock the secrets of product success and customer satisfaction. But in reality, I was often lost, chasing after ever more convoluted metrics and segmentations.

But then, last year, salvation came in the form of TARS (Target audience, Adoption, Retention, and Satisfaction), a framework developed by the wise sages at Reforge. It helped me break free from the shackles of misleading metrics like NPS or revenue and instead focus on the true heart of product success.

TARS is more than just a collection of metrics; it’s a framework that allows us to evaluate features and answer the crucial question, “What does a good outcome for my feature look like?”.

For those willing to embrace it, TARS can be a game-changer, allowing us to navigate the complex landscape of product management with greater clarity and purpose. It’s a powerful tool that I’ve come to rely on as I strive to foster team collaboration and develop products that truly delight users, and I hope it will now become one of yours as well.

Mastering the TARS Framework: A Four-Step Guide

TARS lets product managers delve deep into four critical areas: Target Audience, Adoption, Retention, and Satisfaction. Together they give you a comprehensive view of which features are performing well and which are not.

In a world of constant innovation and disruption, TARS offers a holistic approach to product management, providing a framework for understanding and evaluating feature performance beyond superficial metrics like revenue and NPS.

The problem with business-level metrics like NPS is that they don’t provide an accurate view of how well a product performs.

For example, you could have a product that sold tons of licences last quarter, but your users could still hate it. While the sales team are celebrated like heroes with champagne and confetti, your users are left struggling with crippled features and password resets. On the other hand, you may have a product not meeting its revenue targets, with leadership continually threatening to move it into the dreaded “maintenance mode”. Still, your users love your features and can’t get enough of them.

Without a consistent system of evaluating feature performance, we are forced to rely on product-wide metrics to make decisions.

It’s nobody’s fault that most businesses operate this way; measuring things like NPS and revenue is easy, but proper feature evaluation is challenging. That’s where TARS comes in, helping align your product strategy with business goals and user needs.

Let’s break down the steps.

1. Target Audience

As product managers, we must understand the problem we are trying to solve and for whom.

The first stage of the TARS framework focuses on this by first encouraging us to quantify the target audience by asking, “What percentage of all my product’s users have this specific problem my feature aims to solve?” For example, if we wanted to build an Export Button for our search results page, we may know from user interviews that around 10% of all users need to use their search results in another format to perform various offline tasks. In this case, our target audience would be 10%.

However, if we do not have access to research, we can approximate the target audience from existing product analytics. For example, I could see what % of users engage with an existing or similar feature that may be trying to solve the same problem within a given month or week.

It is important to note that while analytics can provide valuable insights, we should always pair them with reasonable assumptions and supporting user research where possible. For example, let’s say I have an existing Export Button feature on my search results page, and I can see that 5% of all users engage with it. Saying the target audience is 5% would not be entirely correct: that figure only counts the users who found and used the button, so I should assume that more users have the problem my export feature is trying to solve.

But what if we have no idea what % of all users have the specific problem our feature aims to solve? I suggest you stop reading this article, walk to the nearest reflective surface of choice, look yourself in the eye and chant, “Stop, torturing, yourself!” Accept that what you are about to do is a gamble, which is fine as long as everyone on the team acknowledges it.

Regardless of your approach, always try to answer this question, “What does a good target audience % look like?”. If your feature is only used by 1% of users, would you be happy with that outcome?

2. Feature Adoption

The second step in the TARS framework is to evaluate how well we are acquiring our target audience.

Once we’ve pinpointed the percentage of our audience that has the problem our feature aims to solve, the next step is to determine how many of them actually use our feature to solve that problem. This is known as user adoption, i.e. the number of users who engage with and utilise our feature in a meaningful way.

User adoption is crucial to understand, as it allows us to align our expectations with those of our users and see if they are using our feature as we anticipated. To determine this, we can track the adoption rate of our feature by monitoring the number of unique users who engage with it over a specific time period.

If we look at our export button example again, the question to ask is: what % of users exported successfully? If it’s a search product, what % of users engaged with the search results? If it’s a GPT-powered text generation tool, how many users produced more than 500 words with it and saved the results? If it’s a chat platform, how many users used your channel-sharing feature to share a channel? These are examples of meaningful user engagement.

Once we have identified what adopted behaviour looks like, it’s simply a matter of dividing the number of adopted users by the number of active target users and boom, you have your feature adoption.
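As a rough illustration of that division, here is a minimal Python sketch. The function name `adoption_rate` and the user IDs are hypothetical, and it assumes you can pull two sets of user IDs from your analytics: the active target users and the users who performed the adoption action.

```python
def adoption_rate(adopted_users: set[str], active_target_users: set[str]) -> float:
    """Share of active target users who performed the adoption action."""
    if not active_target_users:
        raise ValueError("active_target_users must not be empty")
    # Only count adopters who actually belong to the target audience.
    adopted_in_target = adopted_users & active_target_users
    return len(adopted_in_target) / len(active_target_users)


# Hypothetical data: 10 active target users, 4 of whom exported successfully.
target = {f"u{i}" for i in range(10)}
exported = {"u1", "u3", "u5", "u7", "x9"}  # x9 is outside the target audience

rate = adoption_rate(exported, target)
print(f"Feature adoption: {rate:.0%}")
```

Intersecting the two sets is a deliberate choice in this sketch: a user outside the target audience who happens to click the button should not inflate the adoption figure.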

So what is this telling me? Well, even within a specified target audience, not all users may care about the problem our feature aims to solve in the same way. Comparing measured adoption with our forecast answers a simple question: is adoption lower than, higher than, or in line with what we expected?

One excellent thing about measuring feature adoption is that it encourages product teams to align around what a good outcome looks like. I’m constantly surprised by how differently some people interpret what success looks like for a product, so setting expectations with a framework like TARS can help save many headaches later down the line.

The adoption percentage of a feature is typically a good indicator of how severe the problem you are trying to solve really is. If adoption is lower than expected, this may mean the problem has easy workarounds, so users feel less need for the feature. If adoption is higher than expected, we may be working on a problem that significantly inhibits users from getting value from the product.

It’s essential to remember that low adoption doesn’t necessarily mean failure, and a high adoption doesn’t necessarily mean success. For example, low adoption may mean the feature was not surfaced well during user journeys or that we over-scoped the target population. Regardless, it’s all about identifying adoption patterns as soon as possible to build strategies for improvement.

3. Feature Retention

Retention is a crucial indicator of whether our feature solves the problem it was designed for and achieves product-market fit. When evaluating retention, we must ask ourselves, “Of all the unique users who meaningfully adopted our feature, how many came back to use it again?”

First, it’s essential to understand the natural frequency of your feature. Does your feature solve a problem that users encounter daily, weekly, monthly, or even yearly? If user research can’t help you answer this question, don’t fret. Measuring retention for a live feature is relatively straightforward. Perform a cohort analysis, graph the curve over time, and see whether the retention curve flattens.

The initial logarithmic decline after adoption occurs because, even after users adopt a feature, it still takes effort to build a habit around using it. What we want is stable retention: a curve that flattens for the users who performed the adoption action. Once we identify where the curve flattens, we can establish the feature’s natural frequency of use and check whether the expected number of users are still using it weeks or months later.

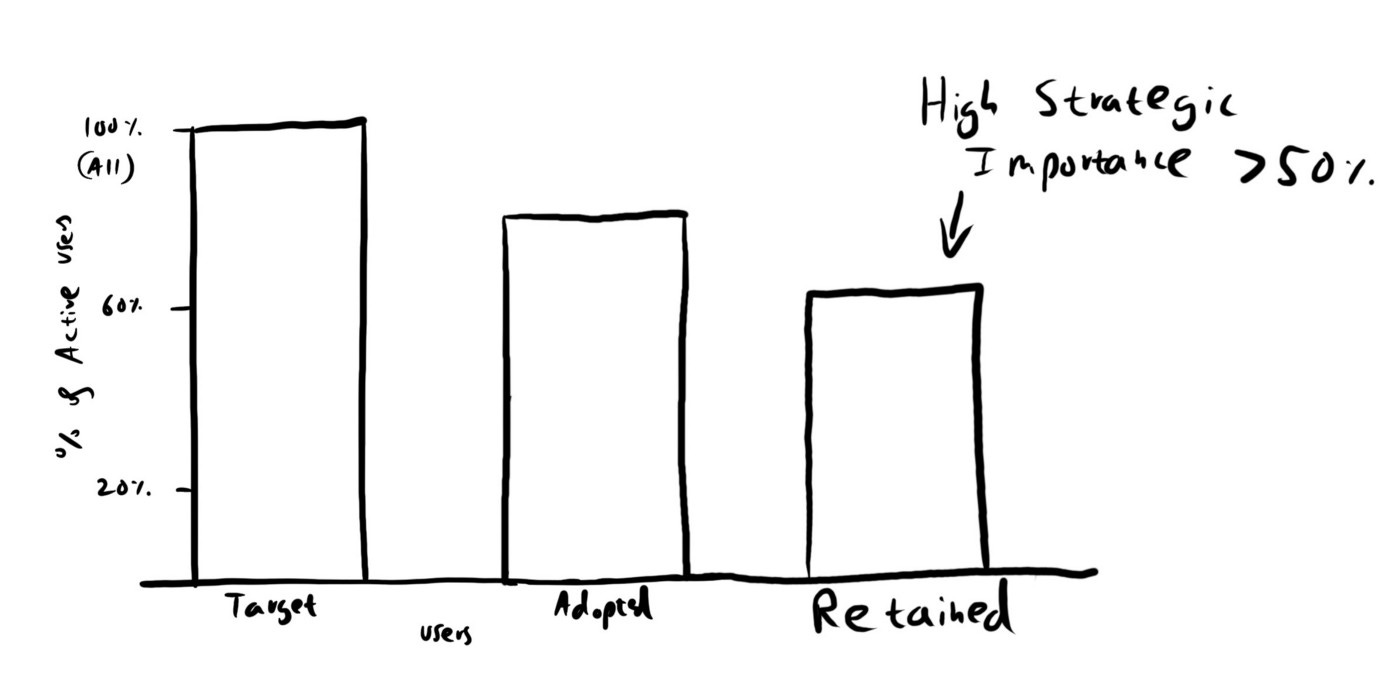

If you observe that your feature has a 50% or greater retention of all your adopted users, you can be pretty confident you have a feature of high strategic importance. A 25–35% retention rate is likely of medium strategic significance, and retention of 10–20% is of low strategic importance.
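Those bands are easy to encode as a rule of thumb. The sketch below is only that: the function name is hypothetical, and because the bands above leave gaps (for example between 35% and 50%), it returns "unclassified" for those values rather than guessing.

```python
def strategic_importance(retention: float) -> str:
    """Map a retention rate (0.0 to 1.0) onto the rough bands from the text."""
    if not 0.0 <= retention <= 1.0:
        raise ValueError("retention must be between 0 and 1")
    if retention >= 0.50:
        return "high"
    if 0.25 <= retention <= 0.35:
        return "medium"
    if 0.10 <= retention <= 0.20:
        return "low"
    # The text does not say how to treat values between the bands.
    return "unclassified"


for value in (0.55, 0.30, 0.15, 0.40):
    print(f"{value:.0%} retention -> {strategic_importance(value)}")
```

Values that land in a gap are a prompt for a conversation with the team, not something the function should decide on its own.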

One important thing to bear in mind when reading retention curves is the curve that never stabilises: it just keeps declining and never plateaus. This means that no share of our target users is building a sustainable habit around the feature, which likely indicates that it is not solving the desired problem for our users.
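To make the cohort analysis a little more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the function names, the sample data, and especially the plateau rule (the last three points sit within two percentage points of each other), which is just one simple heuristic and not part of TARS itself.

```python
def retention_curve(adopted: set[str], active_by_period: list[set[str]]) -> list[float]:
    """Fraction of the adopted cohort that used the feature in each later period."""
    if not adopted:
        raise ValueError("adopted cohort must not be empty")
    return [len(adopted & active) / len(adopted) for active in active_by_period]


def has_plateaued(curve: list[float], window: int = 3, tolerance: float = 0.02) -> bool:
    """True if the last `window` points differ by no more than `tolerance`."""
    if len(curve) < window:
        return False
    tail = curve[-window:]
    return max(tail) - min(tail) <= tolerance


# Hypothetical cohort of four adopters and who came back in weeks 1 to 3.
cohort = {"a", "b", "c", "d"}
weeks = [{"a", "b", "c"}, {"a", "b"}, {"a", "b", "z"}]
curve = retention_curve(cohort, weeks)

# Two hypothetical curves: one that flattens and one that keeps declining.
stable = [0.60, 0.45, 0.38, 0.35, 0.34, 0.34]
declining = [0.60, 0.45, 0.33, 0.24, 0.17, 0.11]
print(curve, has_plateaued(stable), has_plateaued(declining))
```

In practice you would pick the period length to match the feature’s natural frequency and tune `window` and `tolerance` to how noisy your data is.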

4. Satisfaction

As a product manager, it can be disheartening to realise that sometimes, despite all our best efforts, we’re actually making things worse. And it’s not always obvious. After all, if our adoption and retention metrics look good, shouldn’t that mean we’re doing something right? But as I learned the hard way, it’s not always that simple.

You see, sometimes users don’t have a choice. They’re stuck using a product that drives them crazy, whether it’s because their bank has a great interest rate, their family only uses Facebook Messenger, or their company makes them use Workday. These are what we like to call “Hidden Detractors”: features that people use but find frustrating. And trust me, these Hidden Detractors can come back to bite us if we’re not careful.

The problem is product teams often miss these Hidden Detractors, and as a result, they create frustration for their users. And then, one day, somebody launches a marginally better product, and all their users jump ship, leaving the original product team scratching their heads and wondering what went wrong.

So how do we avoid this pitfall? By measuring satisfaction, and not with just any survey, but with the standardised one known as the Customer Effort Score (CES). According to Gartner research, 96% of customers who identify a product or service experience as high effort become disloyal, compared to only 9% who have a low effort experience.

The CES survey is a standard methodology that effectively asks some variation of the question, “How easy or difficult was it to complete a task?” Users can then respond on a scale of 1–5, from “much more difficult than expected” to “much easier than expected”. Anything that scores 3–5, i.e. “as expected” to “much easier than expected”, should be considered a “satisfied” user experience.

For CES to be most effective, our surveys should target only our retained users. This allows us to understand if there are any hidden problems not reflected in the feature’s retention while focusing on improving retention independently.
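Turning CES responses into a satisfaction percentage is a small calculation. The sketch below assumes the responses are already limited to retained users and stored as integers from 1 to 5; the function name and the sample responses are hypothetical.

```python
def satisfied_share(ces_scores: list[int], threshold: int = 3) -> float:
    """Share of CES responses at or above `threshold` on the 1-5 scale."""
    if not ces_scores:
        raise ValueError("ces_scores must not be empty")
    if any(score < 1 or score > 5 for score in ces_scores):
        raise ValueError("CES scores must be between 1 and 5")
    satisfied = sum(1 for score in ces_scores if score >= threshold)
    return satisfied / len(ces_scores)


# Hypothetical responses from ten retained users.
responses = [5, 4, 3, 2, 1, 4, 3, 5, 2, 4]
share = satisfied_share(responses)
print(f"Satisfied retained users: {share:.0%}")
```

The default threshold of 3 follows the “as expected” cut-off described above; raise it if your team wants a stricter definition of satisfied.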

Now, it’s important to remember that survey data is just a snapshot of our target audience, so we should take any feedback with a pinch of salt. But by setting up a “feedback river” where we periodically collect and summarise qualitative user feedback, we can build up product intuition and make informed decisions.

So let’s not just focus on the numbers but on the people. By measuring satisfaction, we can ensure that our features are used and loved.

Hidden fifth step: Pulling all the metrics together

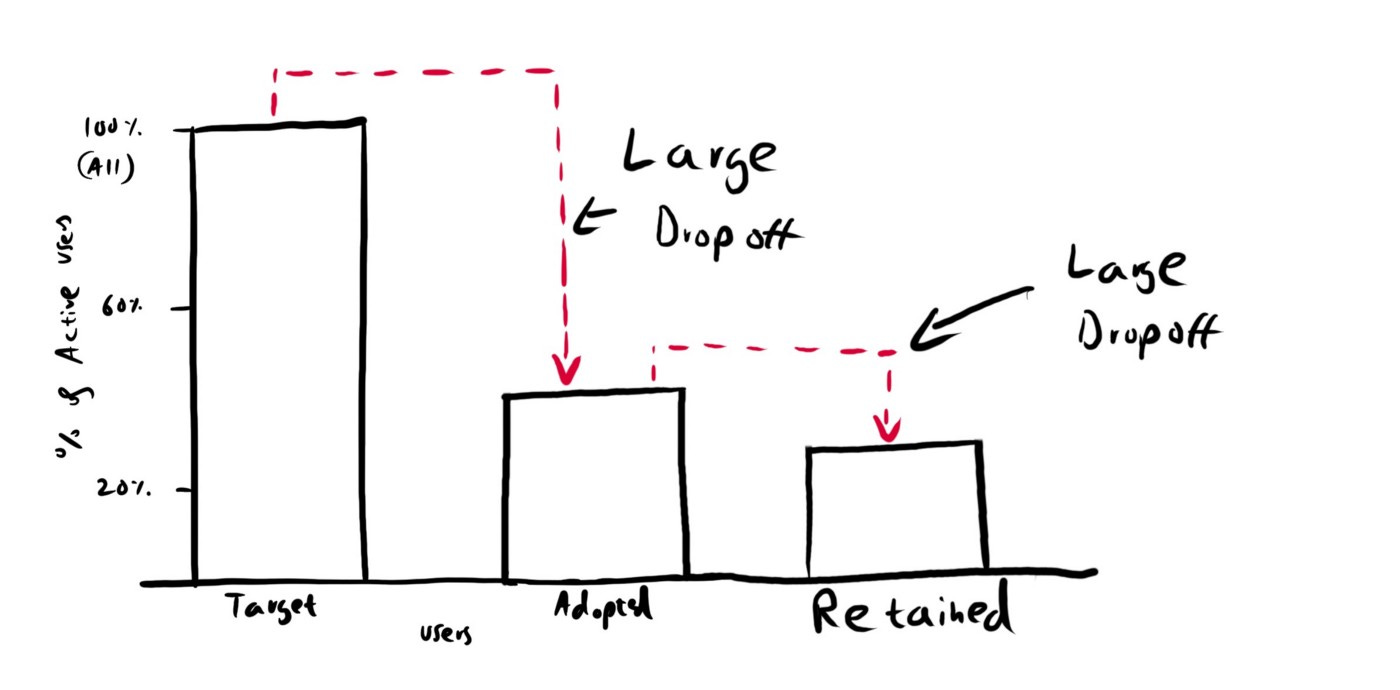

Finally, the pièce de résistance, the cherry on top, the icing on the cake: pulling all the TARS metrics together into a funnel chart.

This visualisation is like a roadmap to product success, showing you exactly where you’re hitting the mark and where you’re falling short. And let me tell you, it’s a lot more effective than explaining it all with a bunch of numbers and jargon.

Now, as you might expect, you’ll see some loss of users at each step. But if you see a particularly steep drop-off in one area, that’s a red flag that something needs attention. It’s like a treasure map, pointing you straight to the areas that need the most work.

But the real beauty of the funnel chart is that it’s not just a diagnostic tool but also a way to track progress over time. As you make changes and improvements, you can see the effect they have on the numbers, and it’s a real morale boost to see those sections of the funnel getting bigger.
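If you want to find the steepest drop-off without eyeballing the chart, a few lines of Python will do it. This is a sketch under assumptions: the step names and user counts are made up, and `step_conversions` is a hypothetical helper, not part of any analytics tool.

```python
def step_conversions(funnel: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Conversion into each step from the step before it, as (step_name, rate)."""
    conversions = []
    for (_, previous), (name, count) in zip(funnel, funnel[1:]):
        if previous == 0:
            raise ValueError(f"no users left before step {name!r}")
        conversions.append((name, count / previous))
    return conversions


# Hypothetical user counts at each TARS step.
funnel = [
    ("all users", 1000),
    ("target", 800),
    ("adopted", 400),
    ("retained", 280),
    ("satisfied", 252),
]

conversions = step_conversions(funnel)
# The step with the lowest conversion is the steepest drop-off.
weakest = min(conversions, key=lambda step: step[1])
print(f"Steepest drop-off is into '{weakest[0]}' at {weakest[1]:.0%} conversion")
```

Re-running the same calculation each quarter gives you the progress-over-time view: the conversion into each step should creep upwards as you ship improvements.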

Using TARS for feature strategy

As a product manager, I often find myself in a pickle when getting my teams to row in the same direction. When planning a quarter, I want healthy debate and engagement from my engineers, designers, data scientists, and product leaders about the bets we want to take. But all too often, we set lofty goals like “increase revenue by 12% in 8 months” and hope for the best. This approach can lead to short-sighted actions prioritising short-term performance over long-term learning.

Thankfully, TARS is an excellent tool for avoiding such pitfalls.

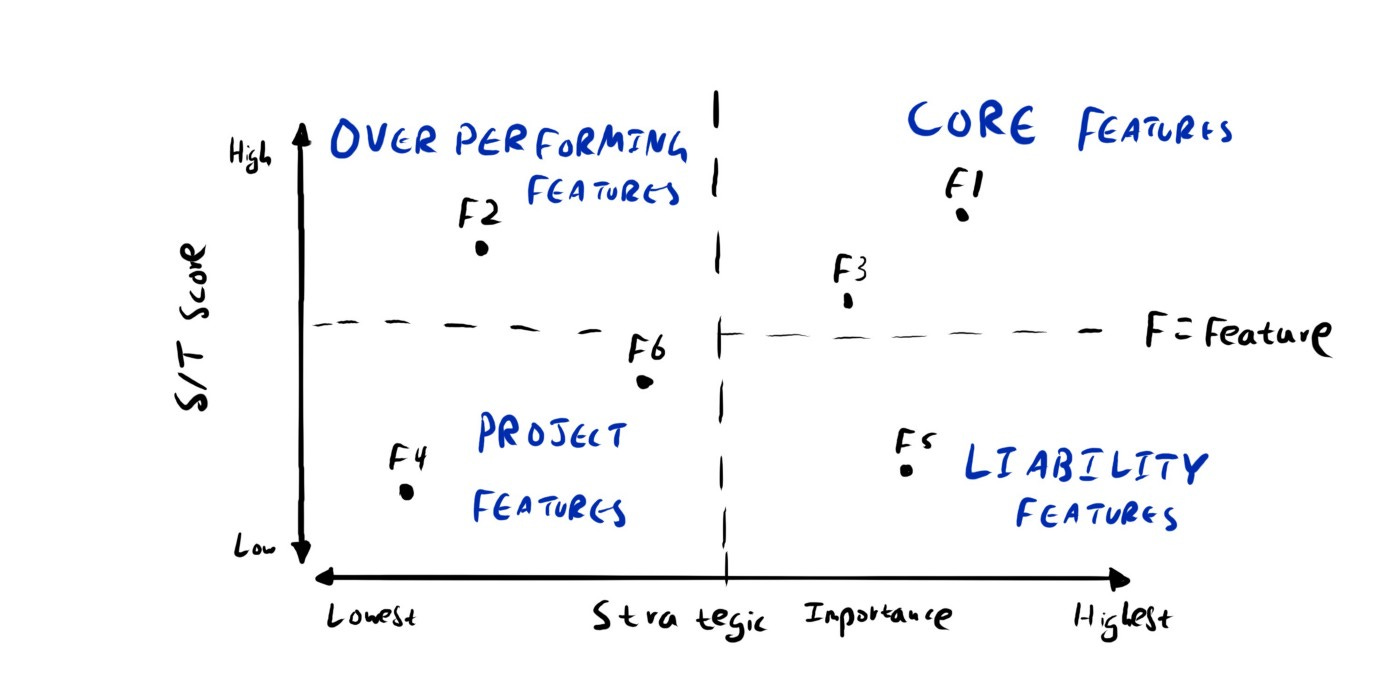

See, TARS is more than a collection of metrics; we can divide the % of satisfied users by the % of target users for any given feature to create something called an S/T score. For example, say a feature has a target audience of 80% of all users. If 50% of the target audience adopts it, that is 40% of the total. If 70% of adopted users are retained, that is 28% of the total. And if 90% of retained users are satisfied, that is about 25% of the total. The S/T score for this feature would be 25% divided by 80%, which is roughly 31%.
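The same arithmetic can be written out in a few lines of Python. The function name `st_score` is hypothetical; the inputs are the percentages from the example, and the unrounded results (25.2% and 31.5%) differ slightly from the rounded figures in the text.

```python
def st_score(target: float, adoption: float, retention: float, satisfaction: float) -> float:
    """Satisfied users as a share of target users.

    `target` is a share of all users; each other rate is a share of the previous step.
    """
    if target <= 0:
        raise ValueError("target must be greater than 0")
    satisfied_of_total = target * adoption * retention * satisfaction
    return satisfied_of_total / target


target, adoption, retention, satisfaction = 0.80, 0.50, 0.70, 0.90

adopted_of_total = target * adoption                  # 40% of all users
retained_of_total = adopted_of_total * retention      # 28% of all users
satisfied_of_total = retained_of_total * satisfaction # about 25% of all users

score = st_score(target, adoption, retention, satisfaction)
print(f"Satisfied: {satisfied_of_total:.1%} of all users, S/T score: {score:.1%}")
```

Notice that the target percentage cancels out, so the S/T score is simply adoption times retention times satisfaction. That is what makes it comparable across features with very different audience sizes.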

By itself, this S/T score is not very exciting. But things get interesting when we apply the same calculation to all our other significant features to create a feature matrix comparison chart.

This chart plots our S/T scores on the y-axis and the feature’s strategic importance within our product portfolio on the x-axis. The more strategically important a feature is, the further to the right it is plotted.

Effectively, we are plotting everything out on a 2x2 matrix from which we can sort features into one of four categories: over-performing features, core features, project features and liability features.
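Here is one way the 2x2 could be sketched in Python. Treat the details as assumptions: the text does not spell out which quadrant is which or where the cut-offs sit, so this sketch assumes a 0.5 cut-off on both axes, an importance score between 0 and 1, and a mapping where high importance with a low S/T score is a liability. The feature names and scores are invented.

```python
def classify_feature(
    st_score: float,
    importance: float,
    st_cutoff: float = 0.5,
    importance_cutoff: float = 0.5,
) -> str:
    """Place a feature in one quadrant of the 2x2 (assumed mapping and cut-offs)."""
    high_st = st_score >= st_cutoff
    high_importance = importance >= importance_cutoff
    if high_st and high_importance:
        return "core"
    if high_st:
        return "over-performing"
    if high_importance:
        return "liability"
    return "project"


# Hypothetical features as (S/T score, strategic importance).
features = {
    "search": (0.70, 0.90),
    "export button": (0.60, 0.20),
    "channel sharing": (0.30, 0.80),
    "dark mode": (0.20, 0.10),
}

quadrants = {name: classify_feature(st, imp) for name, (st, imp) in features.items()}
for name, quadrant in quadrants.items():
    print(f"{name}: {quadrant}")
```

Where you draw the cut-offs matters less than agreeing on them as a team before you look at the results.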

Each quadrant has an exciting story to tell, and I’ve discovered that just sitting down with a team and looking at this chart is enough to stimulate some very spicy conversations about what’s crucial, what’s not and “why did we spend so much time on a feature that 2% of users use?”

But from a product manager’s perspective, the feature area we should be most interested in is liabilities. Liability features, in particular, require a lot of attention and effort to move them into a more helpful category. By identifying these features, we can focus our efforts on the areas that will significantly impact product growth.

The feature matrix comparison chart is an excellent example of how TARS forces us to take a step back and view our product from a three-dimensional perspective. It requires a high level of maturity from a team to acknowledge and take ownership of what is important and worth working on. But by implementing TARS, we can make informed decisions about what to prioritise and develop, leading to a winning product strategy.

The Dark Side of Feature Metrics: How Over-Reliance Harms

During the colonial occupation of India, the British were getting really concerned about the number of venomous cobras in Delhi. To solve the problem, the British government offered a bounty for every dead cobra brought to them.

Initially, this was successful, with many snakes killed for the reward.

Eventually, however, some enterprising people decided to breed cobras to collect the bounty. When the government found out, they scrapped the reward program, and the cobra breeders set their now-worthless snakes free, making the problem even worse.

It’s an entertaining story with a powerful message: if not set up correctly, a metric of success can end up rewarding people for making the problem worse. Suppose I tell you to increase retention on a product, for example, without any other parameters of success like engagement. What’s to stop you from sending out a bunch of marketing emails, hoping a user eventually clicks one?

Thankfully, we can avoid these pitfalls by utilising a balanced framework, like TARS, that takes a more holistic approach to measuring success and avoids rewarding the wrong behaviour.

But even with the best of intentions, metrics can be limiting. Metrics as targets always risk leading to unintended consequences that negatively impact the prolonged success of products when we follow the letter and not the spirit of what those metrics aim to achieve: to solve valuable problems for our users.

It’s important to remember the saying, “What’s measured gets managed.” We must not be limited by the views of our dashboards and always strive to check if we have the proper perspective on the problems we aim to solve. When something goes right, we must ask ourselves why, and when something goes wrong, we must ask ourselves why.

By being vigilant and thoughtful, we can avoid the dark side of feature metrics and build a winning model for our products.

TARS is a powerful tool that helps us steer clear of the pitfalls of our well-intentioned goals. As a product manager, nothing brings me more joy than delving deep into customer insights and understanding their psychology and motivations. It’s in our nature to crave measurements, from the notches on walls that marked our growth to the grades we received on spelling tests. But, as we all know, mastering the art of monitoring and optimising metrics doesn’t always translate to long-term business success.

In a world where our brains aren’t wired to make decisions based on statistics, frameworks like TARS are our best bet. Without acknowledging and regularly reviewing our metrics and user feedback, we risk atrophying our product intuition by losing touch with what our users truly want. It’s like going to the gym — it may not be pleasant in the moment, but the results are usually worth it.

So, whether you take away just a few ideas from TARS or decide to implement it fully, remember that simply reflecting and agreeing on what good looks like for the performance of our features is already a step in the right direction.

Happy metricing, everyone. Let’s TARS a path to success!
